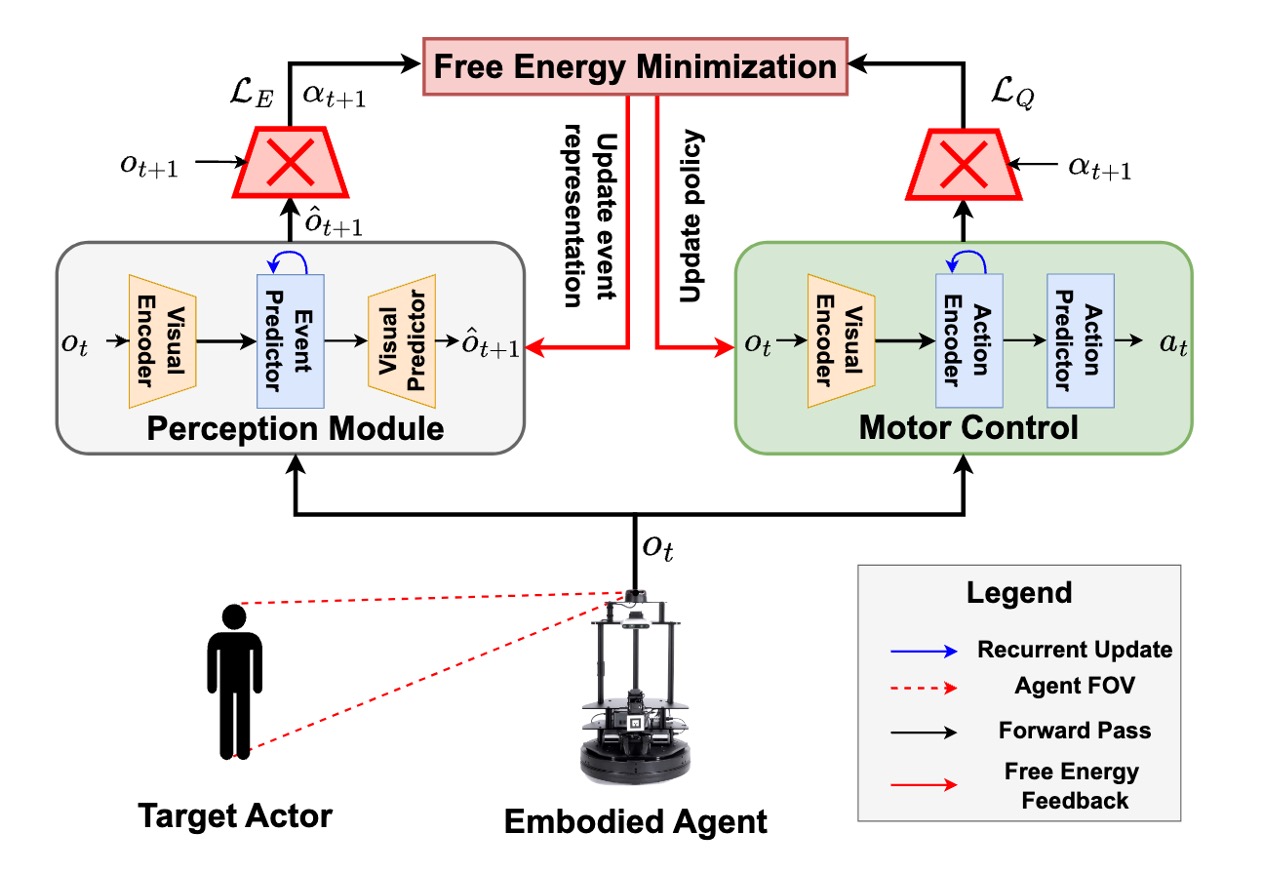

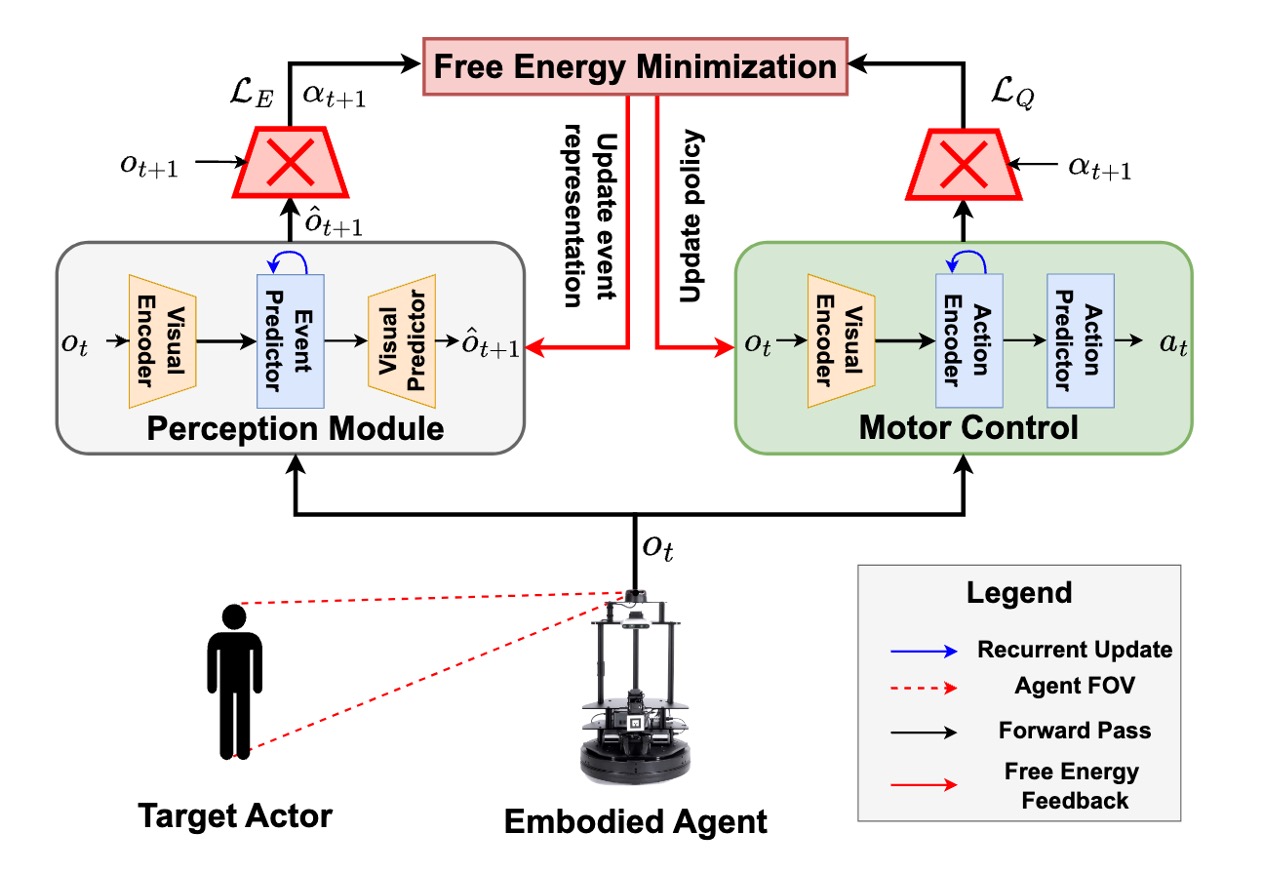

Overall Architecture

Active event perception, the ability to dynamically detect, track, and summarize events in real time, is essential for embodied intelligence in human-AI collaboration. However, existing approaches often rely on predefined action spaces, annotated datasets, and extrinsic rewards, limiting their adaptability and scalability in real-world scenarios. Inspired by cognitive theories of event perception and predictive coding, we propose EASE, a novel framework for active event perception that unifies spatiotemporal representation learning and embodied control through free energy minimization. EASE operates in a fully self-supervised manner, leveraging prediction errors and entropy as intrinsic signals to segment events, summarize observations, and actively track salient actors. By coupling a generative perception model with an action-driven control policy, EASE dynamically aligns predictions with observations, enabling emergent behaviors such as implicit memory, target continuity, and adaptability to novel environments. Through extensive evaluations in both simulation and real-world settings, we demonstrate EASE's ability to balance fine-grained event sensitivity with robust motor control, achieving privacy-preserving and scalable event perception.
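To make the idea of using prediction error and entropy as intrinsic signals more concrete, here is a minimal sketch. It is not the EASE implementation: the function names (`entropy`, `segment_events`), the sliding-window statistics, and the parameters `window` and `k` are all assumptions chosen for illustration. It marks an event boundary wherever the per-frame prediction error spikes well above its recent running level.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete distribution.

    Hypothetical helper: higher entropy means a less certain prediction.
    """
    return -sum(p * math.log(p) for p in probs if p > 0)


def segment_events(errors, window=5, k=2.0):
    """Return frame indices where prediction error spikes.

    Illustrative assumption: a boundary is declared at frame t when
    errors[t] exceeds the mean of the previous `window` errors by more
    than `k` standard deviations. A real system would obtain `errors`
    from a learned predictive model.
    """
    boundaries = []
    for t in range(window, len(errors)):
        recent = errors[t - window:t]
        mean = sum(recent) / window
        var = sum((e - mean) ** 2 for e in recent) / window
        # Small epsilon keeps a flat error history from triggering on noise.
        threshold = mean + k * math.sqrt(var) + 1e-8
        if errors[t] > threshold:
            boundaries.append(t)
    return boundaries
```

For example, calling `segment_events` on a sequence of steady low errors with a single spike flags only the spike frame, while `entropy` could serve as a second signal of how uncertain the model's prediction is at that frame.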
